Guardians of the Screen: Mastering YouTube Kids to Ensure Digital Safety and Learning for the Next Generation

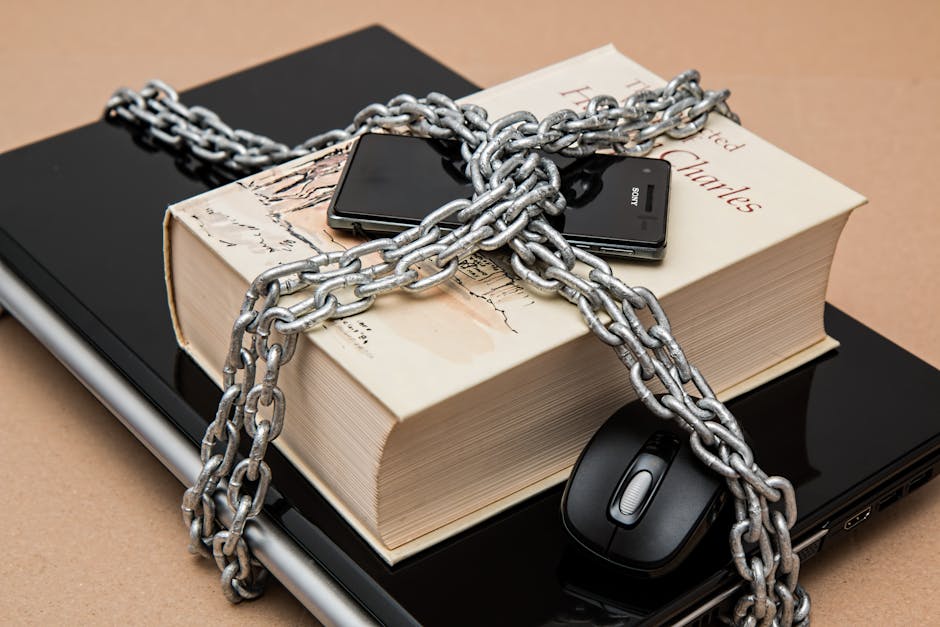

We live in a world where a tablet is often a child's first connection to global content. As international students, whether future parents, educators, or tech innovators, we need to talk about the digital sandbox our younger siblings or future children play in. YouTube Kids promises a safe haven designed for curated exploration, but how solid is that shield? Understanding this app isn't just about setting screen limits; it's about controlling the input that shapes young minds at a formative stage and making sure their digital exploration is genuinely beneficial.

The Algorithmic Tug-of-War: Analyzing YouTube Kids’ Content Curation Model

Recently, a friend (a young Millennial parent struggling to balance remote work and childcare) asked me for a definitive review of YouTube Kids, free of marketing copy. This became my analysis project. Situation: They needed a trustworthy platform but were terrified of the notorious 'Elsagate' content (disturbing videos dressed up with familiar children's characters) and of low-quality, repetitive videos. Task: My goal was to move beyond the advertising claims and analyze the real-world performance of the content filters, specifically comparing the broad 'Explore' mode against the restrictive 'Approved Content Only' setting.

Action: I spent a week analyzing the app's structure: identifying how the automated filters categorize content (pre-vetted categories like Learning, Music, and Gaming) and stress-testing the search function with borderline or inappropriate terms. The key action was understanding the *why* behind certain content removals, which are often based on mass user flagging and subsequent human review rather than on pure, infallible AI detection. Result: While the default settings sometimes let through adjacent, concerning content (videos that are technically safe but poorly produced or overly commercial), the manual 'Approved Content Only' setting turns the app into a genuinely curated tool. Technology needs active human oversight to be truly safe and educational. The takeaway: the application is only as safe as the parental settings in use, and those settings demand hands-on setup.

Risk Management 101: Essential Controls for Digital Guardian Angels

While YouTube Kids is undoubtedly an improvement over unrestricted access to the main YouTube app, skepticism is always warranted. The system relies heavily on categorization tags, which can be manipulated or misinterpreted by creators seeking views. Three controls matter most. First, the Timer acts as a non-negotiable throttle on screen time. Second, the Block feature should be used diligently to remove specific channels or videos that slip through. Third, and most critically, parents should regularly review the child's Watch History via the parental dashboard.

This level of active engagement mitigates the risk of algorithmic drift toward the low-quality, repetitive, or outright strange content that occasionally breaches the safety nets. It shifts control from a distant, imperfect algorithm back to the supervising adult. Delegating screen time control entirely to an automated system is a gamble; be the final firewall in your family's digital life. Empowering the next generation to use technology wisely starts with attentive parental management.

Summary and Conclusion

The journey into YouTube Kids reveals a critical truth: digital safety is an active, ongoing process, not a static product feature. While the app provides a useful framework for safe content exploration, its effectiveness hinges on the diligence of the supervising adult. Use the strict parental controls, scrutinize the watch history, and approach the app not as a passive babysitter but as a specialized library that requires careful and consistent cataloging.